The hallucination gap: Why LLMs cannot judge creativity alone

A common misconception in the current AI wave is that Large Language Models (LLMs) are universal reasoners. Many marketing teams assume they can simply feed a CSV of ad copy into GPT-4 and ask, “Rank these from best to worst.”

Research on the SOMONITOR framework (Yang et al., 2024) shows why this is a dangerous fallacy.

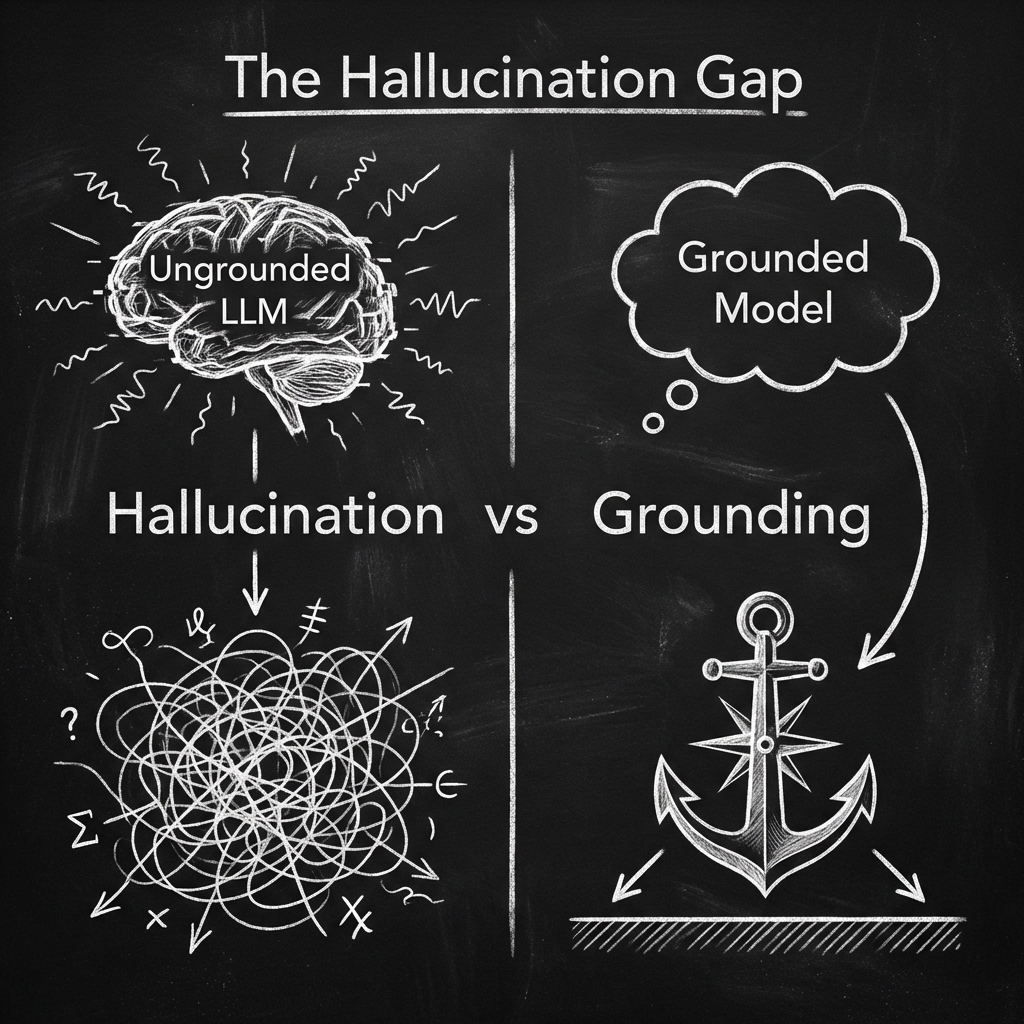

When asked to rank content performance without context, even state-of-the-art models exhibit a “Hallucination Gap”: they confidently provide rankings that bear no meaningful relationship to real-world performance.

The core issue is that LLMs are trained on plausibility, not performance. An ad can be grammatically perfect and rhetorically sound (plausible) but fail completely in the market (performance). An LLM cannot “feel” the market; it can only simulate language.

To understand why this gap exists, we must look at the fundamental architecture of these models.

While recent advances have mitigated the “quadratic complexity bottleneck” of the original Transformer architecture, allowing context windows of millions of tokens, this computational scale does not equate to market intuition.

The model can read a million words of your competitor’s ads, but it cannot inherently know which one generated revenue.

The failure of ungrounded ranking

In the SOMONITOR study, researchers tested three state-of-the-art models (GPT-4, GPT-4o, and Gemini 1.5 Pro) on a ranking task for real-world ads across three distinct industries:

- Brand A (Fitness): 16 ads suited for sales objectives.

- Brand B (Education): 29 ads suited for lead conversion.

- Brand C (Automotive): 16 ads suited for traffic generation.

The experimental setup was rigorous. To reduce random variance, they set the model temperature to 0.1 and used an “LLM Ensemble” approach, running the ranking process five times and averaging the results.
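The article does not specify exactly how the five runs were combined. Here is a minimal sketch, assuming each run returns a full best-first ordering of ad IDs and that "averaging" means averaging each ad's rank position. The function name `ensemble_rank` and the ad IDs are hypothetical.

```python
from statistics import mean

def ensemble_rank(runs: list[list[str]]) -> list[str]:
    """Combine several best-first rankings of the same items by mean rank position.

    Assumption: every run ranks the same set of items; the exact aggregation
    used in the study is not described in this article.
    """
    items = set(runs[0])
    if any(set(run) != items for run in runs):
        raise ValueError("every run must rank the same set of items")
    # Position 1 = best; collect each item's position in every run.
    positions = {item: [run.index(item) + 1 for run in runs] for item in items}
    # Sort by mean position, breaking ties by item ID so the result is deterministic.
    return sorted(items, key=lambda item: (mean(positions[item]), item))

# Three hypothetical runs over the same three ads.
runs = [
    ["ad3", "ad1", "ad2"],
    ["ad1", "ad3", "ad2"],
    ["ad3", "ad2", "ad1"],
]
print(ensemble_rank(runs))  # ['ad3', 'ad1', 'ad2']
```

Averaging smooths out run-to-run swaps, but it cannot correct a bias that every run shares. That is why a low temperature and an ensemble alone do not make the rankings accurate.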

The results were stark. Ungrounded models failed to place any high-performing items in their top three recommendations.

- GPT-4 (Ungrounded): Recall@3 = 0.

- Gemini (Ungrounded): Recall@3 = 0.
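Recall@k measures what fraction of the truly best items appear in a model's top k. The sketch below uses the common definition. The paper's exact variant is not stated in this article, and the ad IDs are made up for illustration.

```python
def recall_at_k(predicted: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the truly relevant items that appear in the top-k of a ranking."""
    if not relevant:
        raise ValueError("relevant set must not be empty")
    hits = sum(1 for item in predicted[:k] if item in relevant)
    return hits / len(relevant)

# Hypothetical example: the three ads that actually performed best.
actual_top3 = {"ad7", "ad2", "ad11"}

# A top three containing none of them scores 0.0, as in the ungrounded runs.
print(recall_at_k(["ad4", "ad9", "ad1", "ad7"], actual_top3, k=3))  # 0.0
print(recall_at_k(["ad7", "ad4", "ad2", "ad9"], actual_top3, k=3))  # 0.6666666666666666
```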

They did no better than guessing. When asked to identify the top 3 best-performing ads from the batch, these frontier models scored a perfect zero. They appear to have hallucinated a “quality” metric based on linguistic elegance rather than performance reality.

The “Siren’s Song” of Hallucination

The paper cites the phenomenon of “Siren’s song in the AI ocean” (Zhang et al., 2023), where LLMs sound convincing but drift into fabrication.

In marketing analytics, this is catastrophic. If you ask an LLM to “audit your account,” it will likely praise the ads that sound the most poetic, not the ones that are actually converting. This creates a feedback loop of vanity metrics, our worst fear in marketing.

The solution (according to the paper): The predictive filter (SODA)

The researchers propose that to make an LLM useful for strategy, you must first strip it of its autonomy in judging performance. You need a “Predictive Filter.”

The SOMONITOR framework introduces a specialized, quantitative model called SODA (from their previous work, “Against Opacity”, MM ’23). Unlike an LLM, SODA is not a generative text model; it is a discriminative classifier trained on hard historical data.

How SODA works

The model treats content scoring not as a creative review, but as a ranking problem. It predicts a distribution over three labels: “High CTR”, “Average CTR”, and “Low CTR”. To make this usable for ranking, they add a linear layer that translates these probabilities into a single scalar score:

Score = α · p(HighCTR) + β · (1 − p(HighCTR))

By optimizing the weights α and β, they convert a classification model into a ranking engine. This model does not “read” the ad as a human does; it scores feature vectors.
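Expanding the formula shows what the linear layer does: Score = β + (α − β) · p(HighCTR). For any α > β, the score increases with p(HighCTR). It therefore orders ads the same way p(HighCTR) would, while rescaling the output. The sketch below uses made-up probabilities and illustrative weights, not values from the paper. The function name `soda_style_score` is hypothetical.

```python
def soda_style_score(p_high: float, alpha: float, beta: float) -> float:
    """Score = alpha * p(HighCTR) + beta * (1 - p(HighCTR)), as in the formula above."""
    if not 0.0 <= p_high <= 1.0:
        raise ValueError("p_high must be a probability in [0, 1]")
    return alpha * p_high + beta * (1.0 - p_high)

# Made-up classifier outputs p(HighCTR) for three ads, plus illustrative weights.
p_high = {"ad_a": 0.72, "ad_b": 0.15, "ad_c": 0.40}
alpha, beta = 1.0, 0.2

scores = {ad: soda_style_score(p, alpha, beta) for ad, p in p_high.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['ad_a', 'ad_c', 'ad_b']

# With alpha > beta, ranking by score matches ranking by p(HighCTR) directly.
assert ranking == sorted(p_high, key=p_high.get, reverse=True)
```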

This creates a “Hybrid Architecture”:

- The Scout (Predictive Model): SODA scores the content based on hard historical data. It answers “What works?”.

- The Interpreter (LLM): The LLM takes the high-scoring items and analyzes why they work. It answers “Why does it work?”.
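The division of labor can be sketched as a two-step pipeline. This is a minimal illustration under stated assumptions:

- The `score` field stands in for a predictive model's output, such as SODA's.
- The ads and the names `Ad`, `scout`, and `build_interpreter_prompt` are hypothetical.
- The actual LLM call is left out, because it depends on your provider.

```python
from dataclasses import dataclass

@dataclass
class Ad:
    ad_id: str
    copy: str
    score: float  # output of the predictive "Scout" model, assumed precomputed

def scout(ads: list[Ad], k: int) -> list[Ad]:
    """Scout step: the predictive score alone decides which ads are winners."""
    return sorted(ads, key=lambda ad: ad.score, reverse=True)[:k]

def build_interpreter_prompt(winners: list[Ad]) -> str:
    """Interpreter step: ask the LLM to explain the winners, not to re-judge them."""
    lines = [
        "The ads below were ranked highest by a model trained on historical CTR.",
        "Do not re-rank them. Explain which creative elements they share that may account for their performance.",
        "",
    ]
    for ad in winners:
        lines.append(f"- [{ad.ad_id}] (score {ad.score:.2f}): {ad.copy}")
    return "\n".join(lines)

# Hypothetical ads and scores, for illustration only.
ads = [
    Ad("a1", "Start your free 7-day trial today", 0.81),
    Ad("a2", "We sell gym memberships", 0.22),
    Ad("a3", "Join 10,000 members who hit their goals", 0.67),
]
winners = scout(ads, k=2)
prompt = build_interpreter_prompt(winners)
print(prompt)  # this string would then be sent to the LLM of your choice
```

The design choice is to keep the ranking decision out of the LLM's hands entirely. The model is only ever asked for the "why".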

Grounding the ghost: The “In-Context” breakthrough

The study found that when the LLM was “grounded” (meaning it was fed examples of known high-performing and low-performing ads with their actual ranks), its accuracy improved substantially.

This is known as In-Context Learning. Instead of asking the model to guess, you provide it with a “Truth Anchor.” You show it: “Here is an ad that got a 2% CTR. Here is an ad that got a 0.5% CTR. Now rank the rest.”
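A grounded prompt can be assembled programmatically from ads with known results. This sketch rests on two assumptions. First, the anchors are given as observed CTRs; the study describes feeding actual ranks, and CTRs are used here to mirror the example above. Second, `build_grounded_prompt` is a hypothetical helper, not part of any library.

```python
def build_grounded_prompt(anchors: dict[str, float], candidates: list[str]) -> str:
    """Assemble an in-context ranking prompt with known-CTR "truth anchors".

    anchors: ad copy -> observed CTR as a fraction (0.02 means 2%).
    candidates: ad copy whose performance is unknown and should be ranked.
    """
    if not anchors:
        raise ValueError("grounding needs at least one anchor with a known result")
    lines = ["Here are ads with their measured click-through rates:"]
    # List anchors best-first so the model sees the performance scale explicitly.
    for copy, ctr in sorted(anchors.items(), key=lambda kv: kv[1], reverse=True):
        lines.append(f'- "{copy}": CTR {ctr:.1%}')
    lines.append("")
    lines.append("Using these as reference points, rank the following ads from highest to lowest expected CTR:")
    for i, copy in enumerate(candidates, start=1):
        lines.append(f'{i}. "{copy}"')
    return "\n".join(lines)

# Hypothetical anchors and candidates.
prompt = build_grounded_prompt(
    anchors={"Get 20% off your first month": 0.02, "Our gym has equipment": 0.005},
    candidates=["Join 10,000 members today", "Open 24/7 near you"],
)
print(prompt)
```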

The impact on performance was clear:

- GPT-4 (Grounded): nDCG@5 (Normalized Discounted Cumulative Gain) score jumped from 0.465 to 0.591.

- Recall@5: Increased from 0.4 to 0.6.
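nDCG@k rewards a ranking for putting the most valuable items near the top. Each item's gain is divided by log2(position + 1), and the total is normalized by the best achievable ordering. The sketch below uses linear gains; some implementations use 2^rel − 1 instead, and the paper's exact variant is not stated in this article. The relevance values are made up.

```python
import math

def dcg_at_k(gains: list[float], k: int) -> float:
    """Discounted cumulative gain: gain at position i (1-based) is divided by log2(i + 1)."""
    return sum(g / math.log2(i + 1) for i, g in enumerate(gains[:k], start=1))

def ndcg_at_k(predicted: list[str], relevance: dict[str, float], k: int) -> float:
    """DCG of the predicted order divided by DCG of the ideal (best possible) order."""
    gains = [relevance.get(item, 0.0) for item in predicted]
    ideal_dcg = dcg_at_k(sorted(relevance.values(), reverse=True), k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0

# Hypothetical graded relevance: higher = better real-world performance.
relevance = {"a": 3, "b": 2, "c": 1, "d": 0}
print(ndcg_at_k(["a", "b", "c", "d"], relevance, k=4))  # 1.0 (perfect order)
print(round(ndcg_at_k(["d", "c", "b", "a"], relevance, k=4), 3))  # 0.614 (reversed order)
```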

This grounding effect held for the other models tested (GPT-4o and Gemini 1.5 Pro). It supports a critical mandate for AI adoption: Structure grounds the AI.

The implications for your stack

If you are building an AI marketing stack, you cannot rely on an LLM to be your strategist. You must build the structural scaffolding that holds it in place.

- Do not use ChatGPT for “Audits”: It cannot see your performance data unless you explicitly feed it the numbers.

- Build a Scoring Layer: You need a separate layer (like SODA or a custom regression model) to handle the math.

- Use the LLM for explanation, not evaluation: Once the Scoring Layer identifies the winners, use the LLM to explain the creative reasons behind the win.
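A scoring layer does not have to be elaborate to illustrate the idea. This sketch makes several simplifying assumptions:

- It swaps in plain logistic regression, not SODA, trained by batch gradient descent.
- It uses two hypothetical binary features, `has_offer` and `has_social_proof`.
- Labels are made up, with 1 meaning the ad landed in the high-CTR bucket.

It is a toy, not a production model. In practice you would use a maintained library and far more data.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w: list[float], x: list[float]) -> float:
    """Estimated probability that an ad belongs to the high-CTR bucket (w[0] is the bias)."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))

def train_logistic(X: list[list[float]], y: list[int], lr: float = 0.5, epochs: int = 2000) -> list[float]:
    """Fit weights (bias first) by batch gradient descent on the log-loss."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = predict_proba(w, xi) - yi  # gradient of log-loss w.r.t. the logit
            grad[0] += err
            for j, xj in enumerate(xi, start=1):
                grad[j] += err * xj
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w

# Hypothetical history: features = [has_offer, has_social_proof], label 1 = high CTR.
X = [[1, 1], [1, 0], [0, 1], [0, 0], [1, 1], [0, 0]]
y = [1, 1, 0, 0, 1, 0]
w = train_logistic(X, y)

# Score new ads; only the top of this list would be handed to the LLM for explanation.
new_ads = {"offer_only": [1, 0], "proof_only": [0, 1], "neither": [0, 0]}
ranked = sorted(new_ads, key=lambda ad: predict_proba(w, new_ads[ad]), reverse=True)
print(ranked)
```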

The LLM is excellent at explaining the pattern, but terrible at finding it in the noise. When you combine the “Scout” (Predictive Filter) with the “Interpreter” (LLM), you close the hallucination gap and turn generative AI into a reliable engine for growth.

What I think: The rear-view mirror trap

While the research is sound, I must challenge the strategic implication.

The SODA model grounds the AI in historical CTR, which creates a “Rear-View Mirror” trap: optimizing exclusively for what has worked inadvertently builds a machine that penalizes what might work.

If you had tested an iPhone ad against BlackBerry ads in 2007 using this model, SODA would likely have flagged the iPhone ad as “Low CTR” because it lacked a physical keyboard (a key feature of high-performing ads at the time).

Grounding prevents hallucination, but it also prevents revolution. Use this framework to optimize your “Business as Usual,” but never use it to launch a “Zero to One” product.

Once you have identified the high-performing content, how do you organize it? This requires a new protocol for structuring the invisible.
